When we want to work on big files with PowerShell, PowerShell goes slower and slower.

In my last projects, I have to work on Windows DHCP Server logfiles and convert this to CSV or I have to work on big Exchange Server logfiles to get information about what Outlook version is used.

This work was very hard because PowerShell gets very slow with big files.

At this time I start searching for other ways to work on these files and I found an excellent article from Carlo alias HappySysadmin. This article discusses the solutions that we have with PowerShell to work on files. After I read this article I do my own test and I have the same results on reading the files. When I want to do searches|selects|group or so on over the content, after I put all the data in a variable or do output to a grid view….

… it is still very slow. 🙁

My Solution

Now I think about my goal for this work….

My main goal is, to search, select or group on columns from the file and for that PowerShell is to slow to do that in the right time.

Question: What solution do I have for that?

Answer: I can use an SQL Server to store the contents of all logfiles! After that, I can make my selects|searches|group with a SELECT on SQL server level or do that with PowerShell in SQL server.

OK, the solution is clear and I think I can use this solution for every logfile and each size of the file.

Requirements:

- MS SQL Server and a user with the database creator role

- You need a Workstation or Server with enough CPU and Memory resources

- You need to know the structure of the file

- Is the file Convertable to comma delimited

- Contains the line “every line in each file” characters that prevent the separation “I mean a comma at a position where you don’t want to split columns”

Script

I create a script that read all files from a directory and convert each line to the format that is compatible to push it as an INSERT INTO SQL statement. The script also defines the SQL connection string and many variables that we use later in the script.

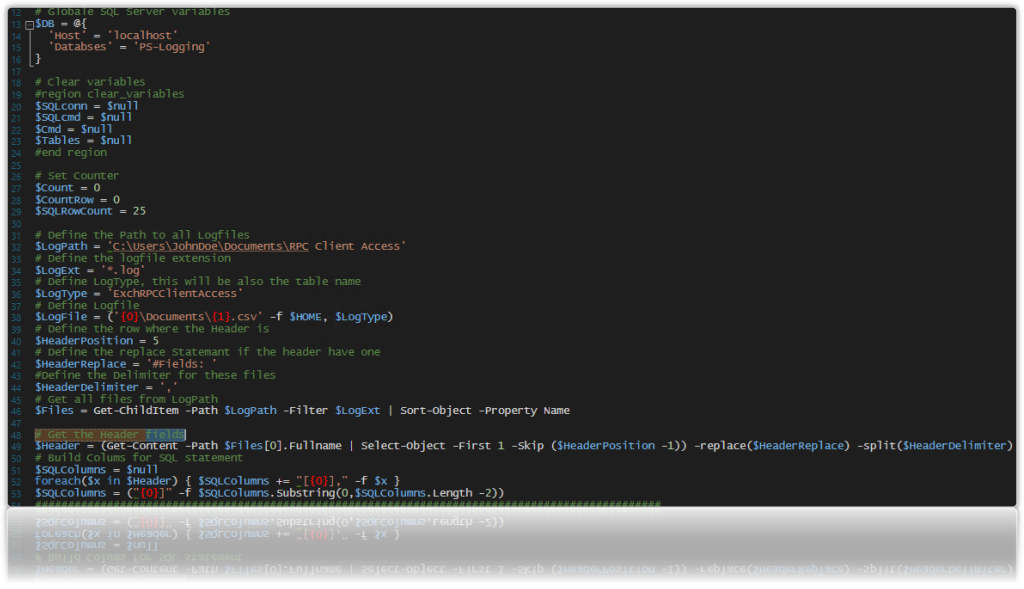

I explain the script header, here:

- Line 13-16: Hashtable for SQL Server connection, the database name should a name of your choice

- Line 20-29: Some variables

- Line 32: Path to the directory where the log files are

- Line 34: LogFile extension

- Line 36: LogType defines the name of the SQL Server table

- Line 38: Csv logfile to save the filenames for files that are processed

- Line:40: Defines where the header columns are

- Line 42: The define the beginning string of this row that we want to replace

- Line 44: Delimiter of the header line

- Line 46: Get all files from a directory

- Line 49: Get the header from the first file

- Line 51-53: Build an SQL column string for later INSERT INTO SQL statement

The bold and italic values should be changed and must match your requirements.

1. In this section, I build the SQL connection and you can see that I connect to the “master” DB, because my DB is not created now.

# SQL Connsction

$SQLconn = New-Object System.Data.SqlClient.SqlConnection

$SQLconn.ConnectionString = ('Data Source={0};Initial Catalog=master;Integrated Security=SSPI;' -f $db.Host, $db.Databses)

$SQLconn.Open()

$SQLcmd = New-Object System.Data.SqlClient.SqlCommand

$SQLcmd.connection = $SQLconn2. Now I build a SELECT statement to check if the database is available. If the database is not available, we create the database.

# First check Database Table

$Cmd = @'

USE master;

IF DB_ID('ARGDatabase') IS NULL

CREATE DATABASE [ARGDatabase];

'@

# Replace variables

$Cmd = $Cmd -replace('ARGDatabase',$db.Databses)

# Check Database exists

$SQLcmd.CommandText = $Cmd

$null = $SQLcmd.ExecuteNonQuery()3. Now we have to check if the database table is available and create the table if not exist.

$Cmd = @'

USE [ARGDatabase];

IF EXISTS(

SELECT *

FROM INFORMATION_SCHEMA.TABLES

WHERE TABLE_NAME = 'ARGTable')

PRINT 'Table exists'

ELSE

CREATE TABLE [ARGDatabase].[dbo].[ARGTable](

[ID] [int] IDENTITY(1,1) NOT NULL,

ARGStables

) ON [PRIMARY];

'@

# Build Columns

foreach($i in $Header) {

$Tables += ("[{0}] [varchar](1024) NULL," -f $i)

}

# Remove the last comma

$Tables = $Tables.Substring(0, $Tables.Length -1)

# Insert tables in $Cmd

$Cmd = $Cmd -replace('ARGStables', $Tables) -replace('ARGDatabase',$db.Databses) -replace('ARGTable',$LogType)

# Check SQL Server if table exists, if not create the table

$SQLcmd.CommandText = $Cmd

$null = $SQLcmd.ExecuteNonQuery()4. the next step is to work on all files and read the files line by line and save each line content to SQL database. We read every line and prepare the line and remove unwanted characters. As you can see I build one INSERT INTO statement and put to it many VALUES lines. That’s because I do a long test with performance, more about performance at the end of this article.

# Load all files in MSSQL Database

foreach($i in $Files)

{

if ($FilesDone.FileName -eq $i.Name) {

# Do noting because we run on that file already

} else {

# Build the Sql statement

$Cmd = ('INSERT INTO [{0}].dbo.[{1}] ({2}) VALUES ' -f $db.Databses, $LogType, $SQLColumns)

[System.IO.File]::ReadLines($i.FullName) | Select-Object -Skip $HeaderPosition |

ForEach-Object {

# Increment the counters

$Count++

$CountRow++

# Set first character for sql statement

$Line = "'"

# Replace unwanted characters

$Line += $_ -replace("'") -replace(", ") -replace('"') -replace($HeaderDelimiter,"','")

# Add the last character

$Line += "'"

# we build a number of $SQLRowCount row to insert with one shot to sql

if ($Count -lt $SQLRowCount) {

$Cmd += (' ({0}),' -f $Line)

} else {

# Add line

$Cmd += (' ({0}),' -f $Line)

# Cut the last comma

$Cmd = $Cmd.Substring(0,$cmd.Length -1)

# Add rows to table

# Check SQL Server if table exists, if not create the table

$SQLcmd.CommandText = $Cmd

$null = $SQLcmd.ExecuteNonQuery()

# Rebuild the statement

$Cmd = ('INSERT INTO [{0}].dbo.[{1}] ({2}) VALUES ' -f $db.Databses, $LogType, $SQLColumns)

$Count = 0

}

}

# If the last INSERT INTO has only this line, irnore it.

if ($Cmd -ne ('INSERT INTO [{0}].dbo.[{1}] ({2}) VALUES ' -f $db.Databses, $LogType, $SQLColumns))

{

# Add last lines

# Cut the last comma

$Cmd = $Cmd.Substring(0,$cmd.Length -1)

# Add rows to table

# Check SQL Server if table exists, if not create the table

$SQLcmd.CommandText = $Cmd

$null = $SQLcmd.ExecuteNonQuery()

}

# Write filename to logfile

$i.Name | Out-File -FilePath $LogFile -Append

}

# Display the file to console, so we know how we are

Write-Host ('Filename: {0} :: Rows INSERT: {1}' -f $i.Name, $CountRow)

}

# Close SQL connection

$SQLconn.Close()

################################################################################################

# End

################################################################################################

Performance

I do many tests with the right values to read the files and to save to the SQL database and I think I found a good setting in my environment.

| Description | Duration |

|---|---|

| Number of files | 15 |

| Rows overall | 389.821 |

| Size of all files | 132 MB |

The values of this table are small, but this is only for the performance tests.

| Typ | 50 rows | 25 rows | 10 rows |

|---|---|---|---|

| [System.IO.File]::ReadLines() | 1.74 | 1.25 | 1.46 |

| Get-Content | 1.86 | 1.36 | 1.59 |

You can see I use at the first tests the [System.IO.File]::ReadLines() function to read the files and the table show how many line I save to SQL database at one shot. The values below the row count was counted with Measure-Command and is the value of total minutes.

In the end, we can see that the first tests are faster than the test with Get-Content.

My last test was with all files (115 files) and a total row count of 3.100.000 and it took nine minutes to proceed. This is 5740 rows per second and I think this is not too bad.

Have a nice day

Arne